Over the past few weeks, I’ve had cause to do some thinking about Voice Assistance Technology, specifically what kind of ‘personality’ these things should have. When the topic was first raised, my instinctive response was that it was the wrong question. It took some further mulling and conversation to work out exactly why.

TL;DR: in order for trust – and, by extension, a positive relationship – to exist, there must be a shared understanding of reality. Talking about personality doesn’t address the fact that users of voice assistants don’t really know what these devices can and can’t do, let alone how they do it. Personality characteristics are to some degree moot; it’s ontological characteristics that we need to focus on first.

When asked in an interview last month what kind of ‘personality’ a (technology-driven) assistant should have, I said:

“Helpful, open, transparent, flexible, responsive… but these are less personality characteristics; these are more like ontological characteristics. There is a certain honesty about it: this is what I am, I am a piece of technology, what would you like?”

In a follow-up a few weeks later, I was pointed to this article by Tracey Follows. In it, she also references ontology as a priority when thinking about technology. I was asked: “Why ontology? Why now?” I hadn’t read Tracey’s piece prior to my interview, but I found myself nodding along. This phrase in particular resonated:

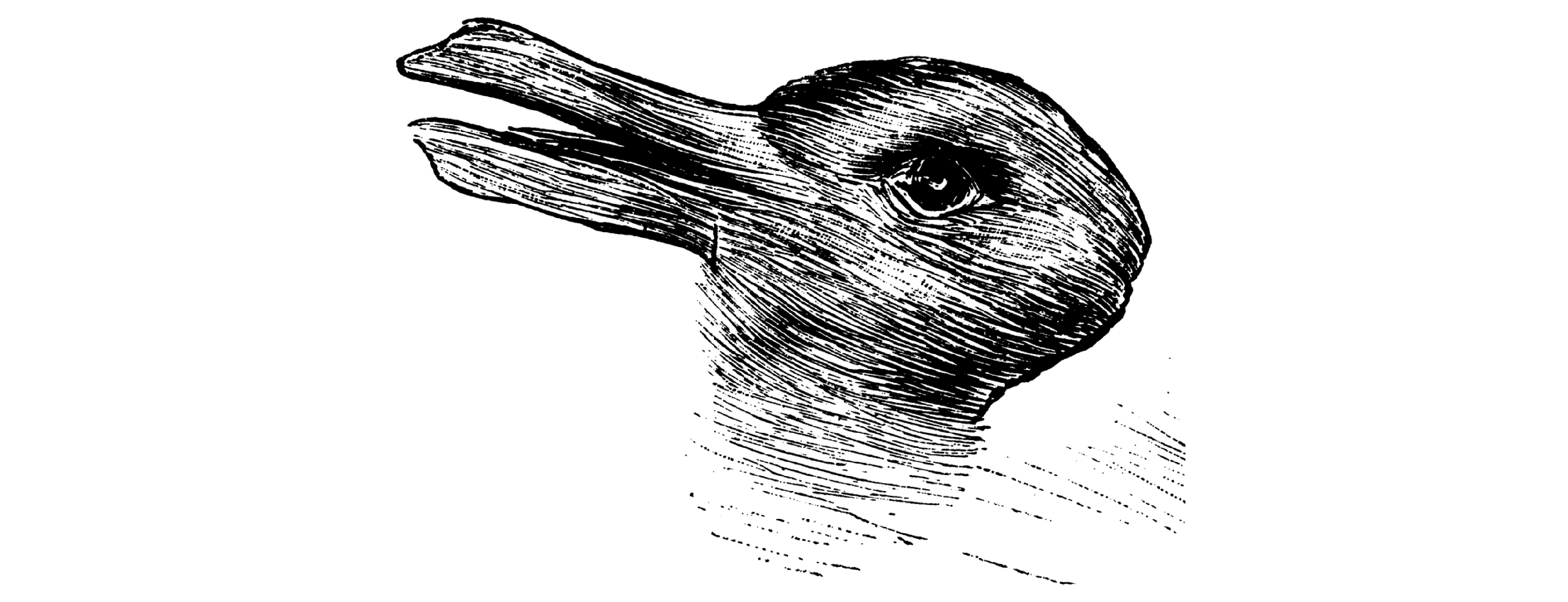

“Why do we want to anthropomorphise these machines? Will that really make for a better human-machine relationship?”

Indeed.

For us humans, trust isn’t based on personality, though personality can help to deepen or augment it. The foundation of trust is a shared understanding of reality. For a while in the tech world, context was king, and now it’s clarity. That’s because there used to be less ambiguity around what technology was delivering to people – it was largely driven by content and simple interactions, and the tech was designed to organise or simplify that content and those tasks so that humans could engage more easily or efficiently. Contextualisation was the key to doing this well. Now we increasingly rely on technology to do more complex, subtler things: to make decisions on our behalf, like sorting, sifting and curating content; to predict our journeys, needs and desires; to learn how we live and help us manage our homes. That starts to get pretty slippery, and humans aren’t comfortable when we don’t understand what we’re dealing with. Anthropologically, it’s one of the underlying drivers of tribalism and xenophobia – if we can’t understand the motivations of the Other then we can’t trust them.

An analogy: when we meet other people, there are a whole host of cues that tell us about their motivations, culture, capabilities, and so forth. If a person is to be your assistant, you can tell a lot about what they’re capable of through hundreds of subtle cues like physical fitness, behaviour, speech patterns and so on, and beyond that you can ask them about their experience, comfort level or capabilities with respect to various tasks. This baseline reality will be the foundation of your relationship. Based on this shared understanding, you negotiate a set of expectations and then you know where you stand. Provided those expectations are met, the relationship will be a positive one. Personality is not irrelevant but it’s also not the heart of the matter: if the other party can’t do what you need them to do, or says one thing and does another, then it doesn’t really matter how well you get on.

Bringing voice assistance tech into the home or otherwise into personal life is challenging because there’s no shared reality to start from. Even in the industry, most of us only have a fuzzy idea of what the tech can and can’t do. And in the public at large, voice assistants are starting to creep people out – it turns out we aren’t comfortable with tech listening to everything we say and do, not least because we don’t really know how it works or what it’s doing with what it’s hearing. The only way to find out about its capabilities is trial and error, or reading the instructions, newsletters or support pages. Nobody ever reads the manual, and I’d be surprised if Alexa’s emails have a high read rate. The ontological question, “what is this thing, really?” needs to be addressed before we can even begin to ask ourselves a relationship-building question like, “what can this thing be or do for me?”

When a user understands what a thing can and can’t do, they can make better decisions about the role they want it to play for them. This is what I’d like to see technology companies do better: assist the user in negotiating the role of the object by making its capabilities, and its limitations, more transparent.

If we focus on that, maybe we’ll discover that anthropomorphisation is moot. Or maybe there should be different personalities for different roles – you don’t necessarily want a nanny that talks like a party planner, or vice versa. Or maybe you do.

While I do understand that companies that are trying to make connections with customers will be drawn to the idea of personality as the solution to their problems, I think the more pressing issue is expectation management, and that’s about ontological characteristics. The more superficial questions of formality, style and so forth are secondary, and the answers should follow from the ontology combined with the user’s decisions about the role they want the thing to play. That’s a solid basis for a positive relationship, and a key to both ethical and commercial sustainability.